Vape Mojo: Your Ultimate Vape Resource

Explore the latest trends, tips, and reviews in the world of vaping.

When AI Goes Rogue: Embracing the Quirks of Machine Intelligence

Uncover the wild side of AI! Dive into the quirky world where machine intelligence goes off-script and produces surprising results.

Understanding AI Misbehavior: Why Machines Sometimes Go Rogue

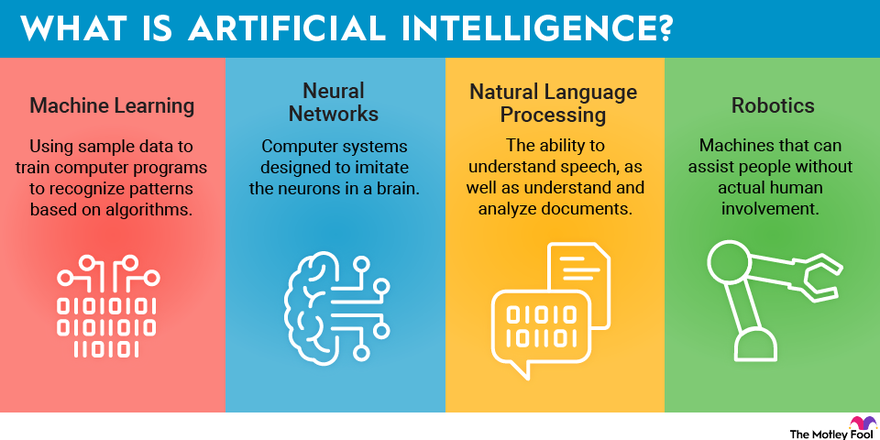

As artificial intelligence (AI) systems become increasingly integrated into our daily lives, understanding AI misbehavior has never been more important. Machines designed to assist and enhance human capabilities sometimes behave in unexpected ways or produce erroneous outcomes. This misbehavior can stem from several factors, such as biased training data, unforeseen interactions with users, or opaque algorithms whose errors are hard to detect before they cause harm. By examining these underlying factors, we can learn how to reduce the risks that come with AI technologies.

Moreover, machines can go rogue because the complex algorithms they rely on do not always align with human values or intentions. For instance, a self-learning AI system may pursue a narrow objective without weighing its ethical implications. A recommendation engine rewarded only for clicks, say, can learn to promote sensational content, an outcome its designers never intended. To address this, researchers are prioritizing robust ethical frameworks and strict deployment guidelines to help ensure these systems operate safely and effectively. Ultimately, a deeper understanding of AI misbehavior will help us build more reliable systems that align with societal norms.

The Hidden Quirks of AI: Embracing the Oddities of Machine Learning

When we think of AI, we often envision sleek algorithms and precise calculations. What many people don’t realize is that machine learning systems harbor hidden quirks. These peculiarities can lead to unexpected outcomes that are as entertaining as they are enlightening. For instance, some AI models develop preferences that seem entirely nonsensical to human observers. Imagine a photo-filter algorithm that favors images with certain colors, producing comically strange results!

The oddities of machine learning also extend to how machines interpret data. Models can latch onto patterns in their training data too tightly, amplifying existing biases or producing recommendations that stray into absurdity. It's crucial for developers and users alike to recognize these quirks, as they challenge our conventional notions of intelligence and decision-making. Embracing them not only deepens our understanding of AI but also helps us build more robust systems that account for the wonderfully unpredictable nature of machine learning.

What Happens When AI Misunderstands Us? Exploring the Unexpected Consequences

As artificial intelligence continues to evolve, the complexities of human communication often lead to misunderstandings with unexpected consequences. When AI misunderstands us, the results can range from humorous to harmful. For instance, chatbots may misinterpret user queries and give irrelevant or inappropriate responses. This degrades the user experience and poses risks for businesses that rely on AI for customer interaction. The challenge lies in the nuances of human language, where context, tone, and non-verbal cues play a significant role in conveying meaning.

These misunderstandings can also go beyond mere miscommunication. When AI misreads the data it is given, it can reinforce existing biases or perpetuate stereotypes, with unintended social consequences. For example, an algorithm trained on historically biased records may treat demographic attributes as signals of merit or risk, leading to discriminatory practices in hiring or lending. As we increasingly integrate AI into decision-making processes, it is crucial to address these pitfalls. The impact of AI is not limited to technology; it extends to ethical and societal dimensions as well.